Why I’m dabbling in AI

One of the hardest parts of working in the creative arts is being able to make the jump when technology changes. Sometimes it’s a change of software: Final Cut to Premiere, or Quark to InDesign. Sometimes it’s hardware: from film to digital, or from big cameras to DSLRs to phones. Sometimes it’s a change in what audiences want: from website videos to TikTok.

The challenge, of course, is that you never know what is going to be the next leap forward, and what is going to be a jump into obsolescence. Have you learnt how to make great vertical videos…or are you now the proud owner of a $10K steadicam rig that lies dormant while other people use a $300 gimbal?

These choices are amplified as you get older. You normally have a number of existing responsibilities, so following one of these new ideas isn’t so much ‘a chance to learn for the sake of learning’ as it is something you’re going to have to make sacrifices in another area of life to accommodate.

Listening to Chris Marquardt on his ‘Tips From The Top Floor’ photography podcast got me thinking about Artificial Intelligence (AI) in photography…and wondering if this could be the next big leap.

So what is AI photography?

Ever wondered what an angry avocado on a skateboard in Paris would look like? Well, AI can create multiple versions of that. And if you also want to see what that would look like if Rembrandt had painted it…or if it was in a White Stripes video clip, or if H R Giger had created it while using Ketamine and drinking Pink Rabbits…AI can create that as well.

It basically takes a massive number of images and uses machine learning to create artwork based on whatever prompts you give it.

Clearly the success of this is based on:

a) the images the machine learning has access to,

b) the ability of the user to create prompts that the machine learning understands, and,

c) the processing power and intelligence of the machine learning to create something that is actually what the user is after.

Parts a) and c) are clearly the domain of the AI tool that you’re using…but the ability to write prompts that it can use is a skill you can learn…and so that’s what I set about doing.

Using Astria.ai

The platform I went with was Astria.ai, as it was one of the more user-friendly options for those of us who can’t code.

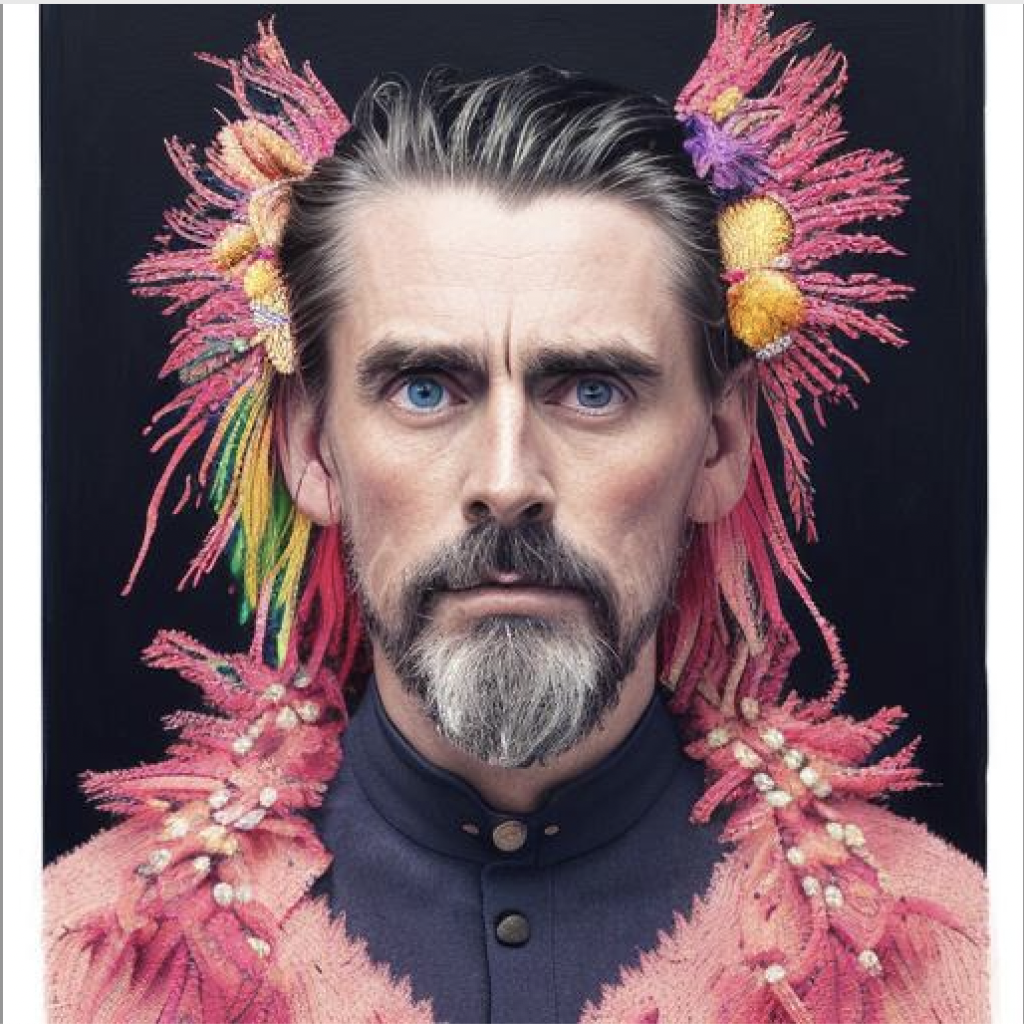

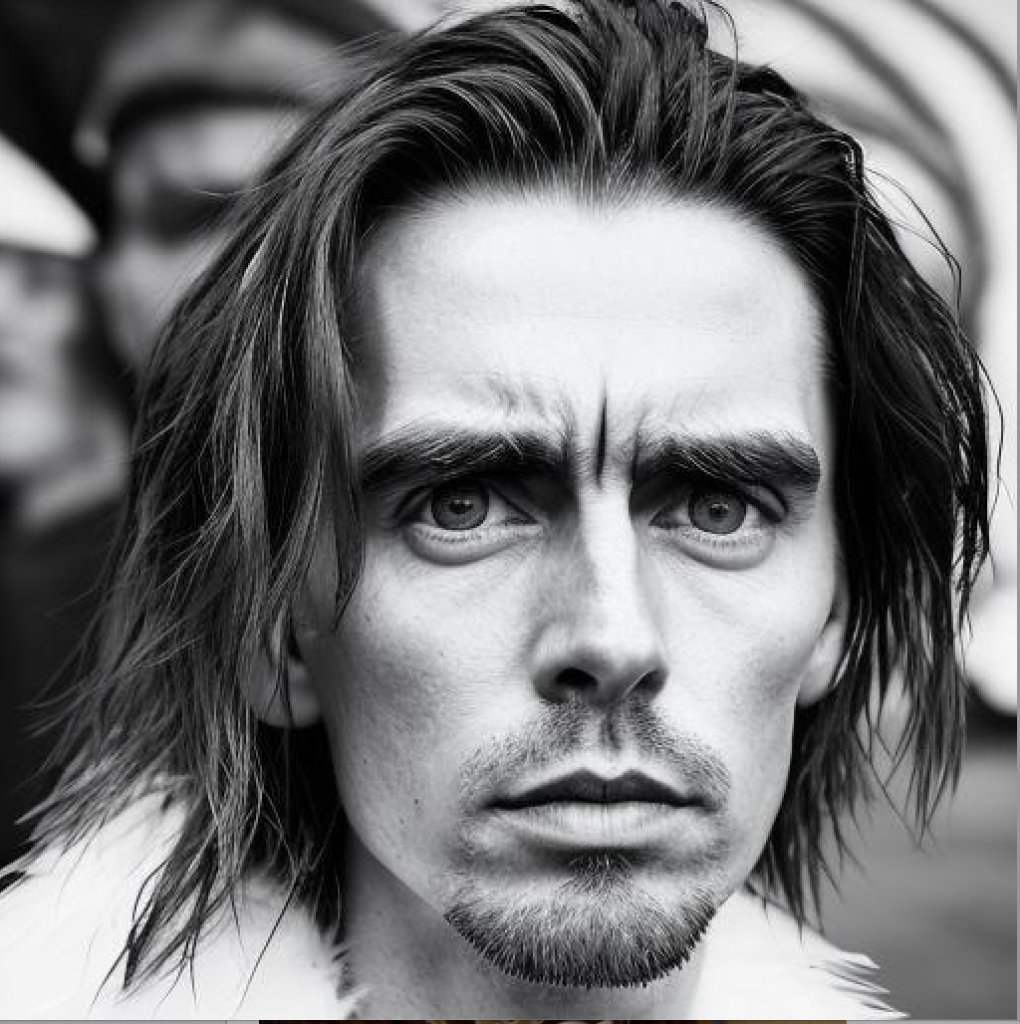

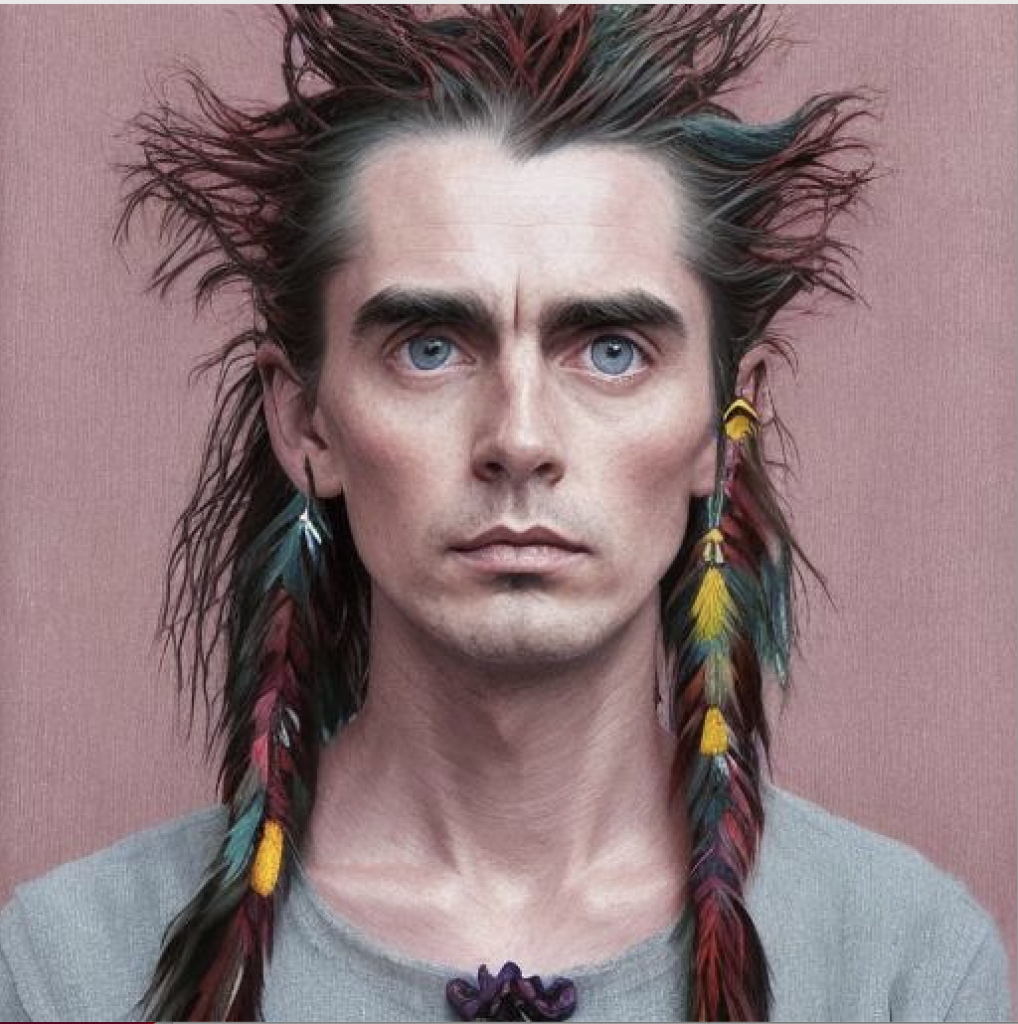

I uploaded about 15 photos of me from my phone, from a variety of angles and in a variety of environments, and then let the tool use some of its default prompts to create some images of me.

I think it’s fair to say my expectations were pretty low. Most of the examples I had seen to this point were on social media, and were very much of the ‘Ermagerd! What is even happening with this?!’ variety. So I was genuinely surprised when at least three of the images made me think ‘I wish I’d taken that photo!’

Now, was this because they made me look about 15 years younger and with cheekbones you could juice an orange on? Yes…that certainly didn’t hurt.

But ultimately, I actually really liked the way they looked, and I have to stress, this wasn’t a case of just taking one of my images and putting it in a different context…none of these images of my face existed before, let alone the feathers and accoutrements that accompanied them!

But what does this mean for photography?

Once I got past the ‘Machine learning does the darnedest things!’ stage, I started to think about what it meant for one part of photography that I love – portraiture.

At its most base form, when I take a portrait of someone, I bring together a range of elements (the person, the environment, the lighting), capture them with a machine (a digital camera), and then use software to bring that photo to life (adjust the contrast, make it black and white, add a vignette, etc).

Is that really so different from what this AI tool had just done?

What would happen if I entered one of these photos in a portrait competition?

What is the line between ‘digitally enhanced’ and ‘artificially created’?

I didn’t actually know…but it did give me a great idea for a portrait!!

The portrait

Any time I look at the work of a great portrait photographer (Simon Schluter…I’m looking at you!) I’m always really impressed by the way they can build an image from the ground up in order to tell a story.

I’m very comfortable just capturing an image of someone and hoping it tells a story, but actually setting out from the get-go to tell a specific story with a photo, and building everything around it…that’s really not a strength I have.

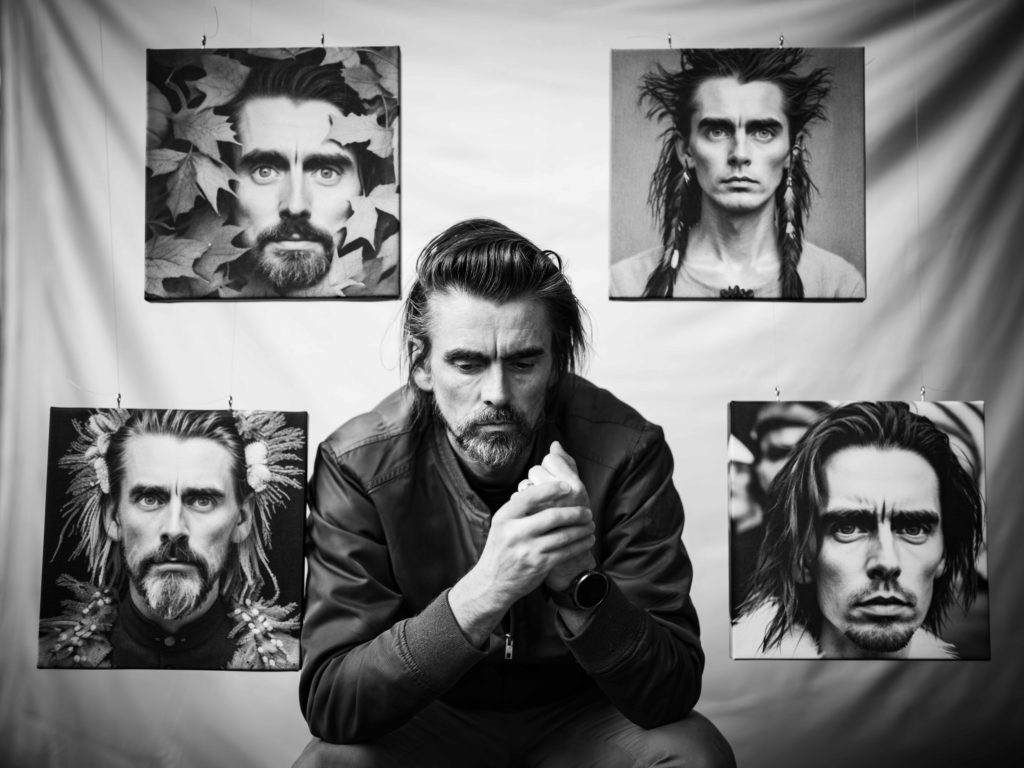

But I suddenly had a vision of an image where I was surrounded by the AI portraits of me, as a reflection of what I was wondering about what the future held as a photographer. When I came up with the idea of the title ‘Self portrAIt’…I knew I had to make this happen.

The first step was to get a selection of the AI portraits printed in a way that I could use for a photo. I went with canvas prints with a wooden frame so that I could stand them up, or hang them from something.

Next step was to work out a background. In my dreams it was a big, austere room with the photos suspended around me…given the complete absence of large austere rooms available with a budget of $0, I settled for a white sheet suspended behind me in our backyard, with the photos suspended from the monkey bars the kids used to play on.

Artistically, I was going to shoot with my trusty softbox so that I could make it look dramatic by just picking me and the photos up with the flash, while everything else fell off to black/grey.

Technically, I was going to shoot it on the GFX 100S I had on loan from Fuji for another project…and the GF32-64mmF4 lens (equivalent to a wide angle lens on a full frame camera).

Cool plan…so how did it go?

As you would expect…badly. First of all, screwing little hooks through canvas into wooden frames is about as much fun as it sounds…but perhaps more importantly, securing these frames to monkey bars via fishing line is a freaking nightmare. The fishing line just cuts through masking tape, and was slipping through the electrical tape we had. It is only through the patience of Josh (my eldest son) and the wonders of gaffer tape that we were able to suspend them where we wanted them.

The next weird problem was that the wide-angle lens that I had thought would be perfect…was actually too wide, and was showing a lot more of the monkey bars and sheet than I had hoped. Fortunately I also had the GF80mm F1.7 lens to work with…and it was a freaking revelation!

Last but not least, we waited for the sun to go down sufficiently so that the white sheet background didn’t have any bright spots on it, and my flash wasn’t having to work overtime trying to knock out too much ambient light. My flash then decided this would be the perfect time to ignore my wireless triggers, and not fire when I pressed the shutter.

* Insert gif from Brooklyn 99 of Peralta saying ‘Cool…cool, cool, cool’ *

So we reset the camera to work with the natural light, and Josh diligently took multiple photos while I tried a variety of poses and facial expressions.

It’s a testament to my inability to self-direct facial expressions, and the frankly dazzling file sizes on the GFX 100S, that we managed to fill a 128GB card with photos that were roughly 5% different from each other!

The result

After going through hundreds of photos that felt like they were exactly the same photo…I came down to these as my faves.

Huge props go to Katie for getting me to actually interact with the pictures in that first one. Where most of my Photoshop attempts look like bad photos…thanks to the fishing line, this photo was suddenly looking like a bad Photoshop. But actually getting my hands on them showed that they weren’t just digitally inserted.

So now the million dollar question: which is your favourite, and why?